AI Governance Maturity Assessment: The Proven Framework to Evaluate Your Organization in 2025

- Bob Rapp

- 2 days ago

Organizations deploying AI at scale face a critical question: How mature is your governance infrastructure? With AI regulations tightening globally and operational risks mounting, a structured maturity assessment provides the roadmap for sustainable AI deployment.

An AI governance maturity assessment systematically evaluates your organization's capabilities across strategic, operational, and ethical dimensions. Unlike ad-hoc audits, these assessments provide benchmarked frameworks that identify specific improvement pathways and measure progress over time.

Why AI Governance Maturity Matters More Than Ever in 2025

The regulatory landscape has fundamentally shifted. The EU AI Act requires providers of high-risk AI systems to establish and maintain a risk management system. The UK's AI regulatory principles call for demonstrable accountability and oversight. Organizations without mature governance frameworks face mounting compliance costs, deployment delays, and reputational risks.

Beyond compliance, mature AI governance drives business outcomes. Organizations with transformative governance capabilities deploy AI 40% faster while maintaining stronger risk controls. They attract better talent, secure enterprise partnerships, and access capital markets more effectively.

The gap between AI innovation and governance capability continues to widen. Most organizations operate reactively, addressing issues after they emerge rather than preventing them systematically. Maturity assessments provide the diagnostic framework to close this gap before it becomes a competitive disadvantage.

The Five Core Dimensions of AI Governance Maturity

Comprehensive assessments evaluate organizations across interconnected capabilities that span technical, operational, and strategic domains.

Strategy and Vision

Mature organizations integrate AI governance into board-level strategic discussions. This dimension evaluates whether AI governance features in quarterly business reviews, strategic planning cycles, and investment decisions. Organizations score higher when governance considerations influence product roadmaps, market expansion plans, and partnership strategies.

People and Expertise

Governance requires specialized capabilities that most organizations lack internally. This dimension measures whether you have dedicated AI ethics officers, legal specialists familiar with algorithmic regulation, and technical teams trained in bias detection methodologies. Mature organizations develop these capabilities systematically rather than relying on external consultants indefinitely.

Processes and Analytics

Standardized workflows distinguish mature organizations from reactive ones. This dimension evaluates your model development lifecycle, testing protocols, and deployment approval processes. High-scoring organizations have documented procedures for bias testing, performance monitoring, and incident response that teams follow consistently.

Ethics and Oversight

Technical capability without ethical frameworks creates systemic risks. This dimension measures your fairness monitoring systems, transparency mechanisms, and stakeholder feedback processes. Mature organizations maintain AI ethics committees with clear decision-making authority and regular review cycles.

Culture and Collaboration

AI governance spans organizational boundaries. This dimension evaluates cross-functional alignment between technical teams, legal departments, and business units. Mature organizations foster governance cultures where teams proactively identify risks rather than waiting for formal reviews.

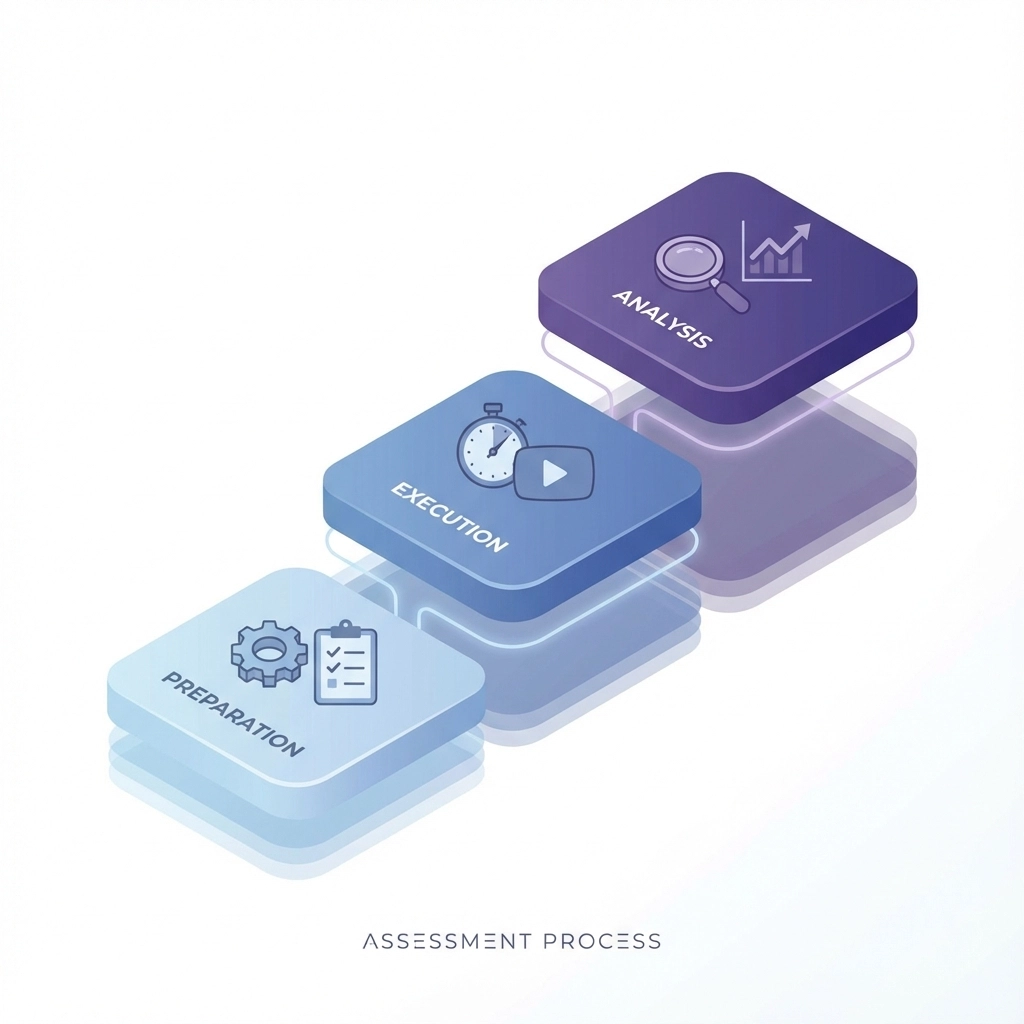

How to Conduct Your Maturity Assessment

Effective assessments require structured approaches that generate actionable insights rather than generic scores.

Preparation Phase

Assemble a cross-functional assessment team including technical leaders, legal counsel, and business stakeholders. Schedule dedicated sessions rather than distributing surveys individually. Governance maturity emerges through collective discussion, not individual responses.

Gather supporting documentation including current AI policies, deployment procedures, and incident reports. Review recent audit findings and regulatory correspondence. This context enables more accurate self-evaluation and identifies documentation gaps.

Assessment Execution

Use structured scoring frameworks that provide clear evaluation criteria. Rate each dimension on consistent scales that distinguish between reactive, proactive, and transformative capabilities. For example:

Reactive (1.0-1.6): Issues addressed after they emerge, with minimal systematic prevention

Proactive (1.7-2.3): Established policies and committees with regular review cycles

Transformative (2.4-3.0): Strategic integration with continuous monitoring and improvement
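
The three bands can be expressed as a small lookup. This is a minimal Python sketch, not part of any published tool: the function name `maturity_band` is hypothetical, and it assumes the bands are read as half-open intervals (below 1.7, below 2.4, up to 3.0) so that an unrounded average such as 1.65 still lands in a band.

```python
def maturity_band(score: float) -> str:
    """Map a 1.0-3.0 dimension score to one of the three maturity bands.

    Assumption: the bands 1.0-1.6, 1.7-2.3 and 2.4-3.0 are treated as
    half-open intervals so scores between published bounds are not lost.
    """
    if not 1.0 <= score <= 3.0:
        raise ValueError(f"score must be between 1.0 and 3.0, got {score}")
    if score < 1.7:
        return "Reactive"
    if score < 2.4:
        return "Proactive"
    return "Transformative"


for s in (1.2, 1.65, 2.0, 2.4, 3.0):
    print(s, maturity_band(s))
```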

Conduct candid discussions about current capabilities without defensive positioning. The assessment's value depends on honest evaluation of gaps and strengths. Document specific examples that support scoring decisions to enable targeted improvement planning.

Analysis and Action Planning

Calculate dimension-specific scores to identify priority areas. Dimensions scoring below 2.0 require immediate attention, with quarterly improvement reviews. Higher-scoring areas provide foundational capabilities that support organization-wide maturity development.
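
A minimal sketch of the prioritization step, assuming dimension scores have already been calculated on the 1-3 scale. The helper `priority_dimensions` and the example scores are hypothetical; only the 2.0 threshold comes from the text above.

```python
PRIORITY_THRESHOLD = 2.0  # dimensions below this need immediate attention


def priority_dimensions(scores: dict[str, float],
                        threshold: float = PRIORITY_THRESHOLD) -> list[str]:
    """Return dimensions scoring below the threshold, weakest first."""
    below = [(score, name) for name, score in scores.items() if score < threshold]
    return [name for _, name in sorted(below)]


# Hypothetical scores, for illustration only
scores = {
    "Strategy and Vision": 2.3,
    "People and Expertise": 1.5,
    "Processes and Analytics": 1.9,
    "Ethics and Oversight": 2.6,
    "Culture and Collaboration": 1.8,
}
print(priority_dimensions(scores))
# ['People and Expertise', 'Culture and Collaboration', 'Processes and Analytics']
```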

Scoring Model Example

Organizations typically implement three-point scales with specific behavioral indicators:

Strategy Integration: Does AI governance feature in board discussions monthly (3), quarterly (2), or annually (1)? Are governance considerations documented in strategic planning processes?

Risk Management: Do you maintain current AI risk registers (3), periodic assessments (2), or reactive incident responses (1)? Are risk mitigation strategies tested and validated regularly?

Stakeholder Engagement: Do you operate AI ethics committees with decision-making authority (3), advisory groups (2), or informal consultation processes (1)? Are stakeholder feedback mechanisms documented and responsive?
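
The behavioral indicators above translate naturally into a lookup table from observed practice to points. The sketch below assumes one observed practice per indicator; the snake_case keys (such as `current_risk_register`) and the `score_indicators` helper are hypothetical labels for the answers described in the text.

```python
# Points per observed practice, following the three example indicators.
INDICATOR_POINTS = {
    "strategy_integration": {"monthly": 3, "quarterly": 2, "annually": 1},
    "risk_management": {
        "current_risk_register": 3,
        "periodic_assessments": 2,
        "reactive_incident_response": 1,
    },
    "stakeholder_engagement": {
        "ethics_committee_with_authority": 3,
        "advisory_group": 2,
        "informal_consultation": 1,
    },
}


def score_indicators(observed: dict[str, str]) -> dict[str, int]:
    """Convert the observed practice for each indicator into 1-3 points."""
    scores = {}
    for indicator, practice in observed.items():
        options = INDICATOR_POINTS[indicator]
        if practice not in options:
            raise ValueError(
                f"unknown practice {practice!r} for {indicator}; "
                f"expected one of {sorted(options)}"
            )
        scores[indicator] = options[practice]
    return scores


observed = {
    "strategy_integration": "quarterly",
    "risk_management": "reactive_incident_response",
    "stakeholder_engagement": "advisory_group",
}
print(score_indicators(observed))
```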

Calculate a weighted average across dimensions to determine the overall maturity level. Organizations typically aim for solidly proactive scores (2.0 and above) before pursuing transformative approaches.
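
A weighted average lets an organization emphasize the dimensions that matter most for its regulatory exposure. The weights and scores below are hypothetical placeholders, and `weighted_maturity` is an illustrative helper rather than a standard formula; the text does not prescribe specific weights.

```python
def weighted_maturity(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of dimension scores; weights need not sum to 1."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same dimensions")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[d] * weights[d] for d in scores) / total_weight


# Hypothetical scores and weights, for illustration only
scores = {
    "Strategy and Vision": 2.0,
    "People and Expertise": 1.5,
    "Processes and Analytics": 2.5,
    "Ethics and Oversight": 2.0,
    "Culture and Collaboration": 1.0,
}
weights = {
    "Strategy and Vision": 1,
    "People and Expertise": 1,
    "Processes and Analytics": 2,
    "Ethics and Oversight": 2,
    "Culture and Collaboration": 1,
}
print(round(weighted_maturity(scores, weights), 2))  # 1.93
```

With these illustrative numbers the overall score is about 1.93, just short of the 2.0 target even though Processes and Analytics already scores 2.5.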

Common Assessment Pitfalls to Avoid

Most organizations make predictable mistakes that compromise assessment effectiveness and improvement planning.

Overestimating Current Capabilities

Teams frequently confuse aspirational policies with operational reality. Having documented procedures doesn't indicate consistent implementation. Score based on demonstrated capabilities with supporting evidence rather than intended practices.
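
One way to keep scores tied to demonstrated practice is to record evidence alongside each rating and flag ratings that lack it. This sketch assumes a hypothetical rule, that any rating above 1 must cite at least one piece of evidence; the `Rating` class and `unsupported_ratings` helper are illustrative, not part of a standard framework.

```python
from dataclasses import dataclass, field


@dataclass
class Rating:
    item: str
    score: int  # 1-3 scale
    # Pointers to supporting artifacts (reports, minutes, logs)
    evidence: list[str] = field(default_factory=list)


def unsupported_ratings(ratings: list[Rating]) -> list[str]:
    """Items scored above the reactive level (1) with no evidence recorded.

    Assumed rule: such ratings likely describe intended rather than
    demonstrated practice and should be revisited before scoring.
    """
    return [r.item for r in ratings if r.score > 1 and not r.evidence]


# Hypothetical ratings, for illustration only
ratings = [
    Rating("Standardized bias testing procedures", 3),  # policy exists, no test reports
    Rating("Incident response and escalation protocols", 2, ["incident post-mortem report"]),
    Rating("Documented model development lifecycle", 1),
]
print(unsupported_ratings(ratings))  # ['Standardized bias testing procedures']
```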

Focusing Exclusively on Technical Dimensions

Governance maturity spans organizational capabilities beyond technical controls. Strong model validation processes mean little without corresponding legal frameworks, stakeholder engagement mechanisms, and strategic integration. Balance technical and operational assessments.

Conducting Individual Rather Than Collective Assessments

Governance emerges through organizational alignment, not individual expertise. Individual surveys miss crucial collaboration gaps and cultural dynamics. Facilitate group discussions that surface different perspectives and promote shared understanding.

Treating Assessment as a One-Time Activity

A maturity assessment provides a snapshot of current capabilities, not a permanent organizational characteristic. Conduct annual assessments to measure improvement progress and identify emerging gaps as AI capabilities evolve.
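
Repeated assessments are most useful when results are compared dimension by dimension. The sketch below, with a hypothetical `progress` helper and made-up scores, reports the change between two annual assessments; a negative delta marks a dimension that slipped and may signal an emerging gap.

```python
def progress(previous: dict[str, float], current: dict[str, float]) -> dict[str, float]:
    """Per-dimension change between two assessments (positive = improvement).

    Only dimensions present in both assessments are compared.
    """
    common = previous.keys() & current.keys()
    return {d: round(current[d] - previous[d], 2) for d in sorted(common)}


# Hypothetical results from two annual assessments
last_year = {"Strategy and Vision": 1.8, "People and Expertise": 1.5,
             "Culture and Collaboration": 2.2}
this_year = {"Strategy and Vision": 2.2, "People and Expertise": 1.5,
             "Culture and Collaboration": 2.0}

changes = progress(last_year, this_year)
declined = [d for d, delta in changes.items() if delta < 0]
print(changes)
print(declined)  # ['Culture and Collaboration']
```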

AI Governance Maturity Assessment Template

Use this structured checklist to evaluate your organization across key dimensions:

Strategy and Vision

AI governance discussed in board meetings (monthly/quarterly/annually)

Governance considerations integrated into strategic planning

Clear AI vision statement with ethical boundaries

Executive sponsorship for governance initiatives

People and Expertise

Dedicated AI governance roles and responsibilities

Regular training programs for AI teams

Legal expertise in algorithmic regulation

Ethics committee with diverse representation

Processes and Analytics

Documented model development lifecycle

Standardized bias testing procedures

Incident response and escalation protocols

Regular governance effectiveness reviews

Ethics and Oversight

Fairness monitoring systems operational

Transparency mechanisms for stakeholders

Clear decision-making authority structures

Regular ethical review cycles

Culture and Collaboration

Cross-functional governance alignment

Proactive risk identification practices

Open communication about governance challenges

Continuous improvement mindset

Rate each area on a 1-3 scale, with specific examples supporting each rating. Focus improvement efforts on dimensions scoring below 2.0 while maintaining strengths in higher-scoring areas.
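
The checklist can be kept as structured data so that item ratings roll up into dimension scores. This is a minimal sketch: only two dimensions are included for brevity, the item ratings are hypothetical, and it assumes each dimension score is the unweighted mean of its item ratings, which the template itself does not specify.

```python
from statistics import mean

# Checklist items from the template (two dimensions shown for brevity).
TEMPLATE = {
    "Strategy and Vision": [
        "AI governance discussed in board meetings",
        "Governance considerations integrated into strategic planning",
        "Clear AI vision statement with ethical boundaries",
        "Executive sponsorship for governance initiatives",
    ],
    "People and Expertise": [
        "Dedicated AI governance roles and responsibilities",
        "Regular training programs for AI teams",
        "Legal expertise in algorithmic regulation",
        "Ethics committee with diverse representation",
    ],
}


def dimension_scores(ratings: dict[str, int]) -> dict[str, float]:
    """Average 1-3 item ratings into one score per dimension.

    Raises if an item is unrated or out of range, so gaps are not skipped silently.
    """
    result = {}
    for dimension, items in TEMPLATE.items():
        missing = [i for i in items if i not in ratings]
        if missing:
            raise ValueError(f"{dimension}: unrated items {missing}")
        invalid = [i for i in items if ratings[i] not in (1, 2, 3)]
        if invalid:
            raise ValueError(f"{dimension}: ratings must be 1, 2 or 3 for {invalid}")
        result[dimension] = round(mean(ratings[i] for i in items), 2)
    return result


# Hypothetical item ratings, in template order
ratings = dict(zip(TEMPLATE["Strategy and Vision"], [2, 2, 1, 3]))
ratings.update(zip(TEMPLATE["People and Expertise"], [1, 2, 1, 2]))

scores = dimension_scores(ratings)
print(scores)
print([d for d, s in scores.items() if s < 2.0])  # ['People and Expertise']
```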

Building Long-Term Governance Maturity

AI governance maturity develops through systematic capability building rather than reactive policy creation. Organizations succeeding at scale treat governance as a competitive advantage rather than a compliance burden.

Effective maturity frameworks provide roadmaps for sustainable AI deployment that balance innovation speed with responsible oversight. Regular assessments enable organizations to measure progress, identify emerging gaps, and maintain governance effectiveness as AI capabilities continue to evolve.

For organizations beginning their governance maturity journey, comprehensive assessment frameworks and implementation resources provide structured pathways for capability development. The key lies in systematic evaluation, honest gap identification, and a sustained commitment to improvement across organizational boundaries.

This post was created by Bob Rapp, Founder, AIGovOps Foundation. © 2025, all rights reserved. Join our email list at https://www.aigovopsfoundation.org/ and help build a global community doing good for humans with AI, making the world a better place to ship production AI solutions.
