EU AI Act 2026: 7 Compliance Mistakes You're Making (And How to Fix Them Before It's Too Late)

- Bob Rapp

- 3 days ago

The EU AI Act's requirements for high-risk AI systems listed in Annex III apply from August 2, 2026, and organizations worldwide are scrambling to comply. Yet our analysis of current compliance efforts reveals that most companies are making critical mistakes that could lead to significant penalties, operational disruption, and market access issues.

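The stakes are high: violating the Act's prohibited-practice rules can draw fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher, and most other breaches, including of high-risk obligations, can draw up to €15 million or 3%. More importantly, organizations that fail to meet these requirements risk having non-compliant systems locked out of the EU market entirely.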

Here are the seven most common compliance mistakes we're seeing, and practical steps to fix them before it's too late.

Mistake #1: Delayed AI System Mapping and Classification

What's happening: Most organizations haven't conducted comprehensive AI system inventories or properly classified their systems under the Act's risk categories, including checking each use case against the high-risk areas listed in Annex III.

Why this matters: Without accurate classification, you can't determine your specific compliance obligations. High-risk AI systems have extensive requirements that take months to implement properly.

How to fix it immediately:

Conduct a complete AI mapping exercise across all business units

Document every AI system your organization uses, develops, or procures

Classify each system by risk level (prohibited, high-risk, limited-risk with transparency obligations, or minimal-risk) and separately flag any use of general-purpose AI (GPAI) models

Create a centralized registry that tracks system purpose, data sources, and decision-making scope

Assign ownership and accountability for each system's compliance
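To make the registry concrete, here is a minimal Python sketch of a centralized inventory that records purpose, data sources, decision scope, and an accountable owner, then reports systems that are still unclassified or unowned. The class names, fields, and simplified category list are illustrative assumptions, not a schema prescribed by the Act.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class RiskCategory(Enum):
    # Simplified tiers (hypothetical labels); GPAI use is flagged separately on each record.
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    LIMITED_RISK = "limited-risk"
    MINIMAL_RISK = "minimal-risk"
    UNCLASSIFIED = "unclassified"


@dataclass
class AISystemRecord:
    system_id: str
    purpose: str
    data_sources: list[str]
    decision_scope: str
    business_unit: str
    owner: str | None = None
    category: RiskCategory = RiskCategory.UNCLASSIFIED
    uses_gpai_model: bool = False


class AIRegistry:
    """Central inventory: one record per AI system used, developed, or procured."""

    def __init__(self) -> None:
        self._systems: dict[str, AISystemRecord] = {}

    def register(self, record: AISystemRecord) -> None:
        if record.system_id in self._systems:
            raise ValueError(f"duplicate system_id: {record.system_id}")
        self._systems[record.system_id] = record

    def classify(self, system_id: str, category: RiskCategory) -> None:
        self._systems[system_id].category = category

    def assign_owner(self, system_id: str, owner: str) -> None:
        self._systems[system_id].owner = owner

    def by_category(self, category: RiskCategory) -> list[str]:
        return [r.system_id for r in self._systems.values() if r.category is category]

    def gaps(self) -> list[str]:
        """List systems that are still unclassified or have no accountable owner."""
        issues = []
        for r in self._systems.values():
            if r.category is RiskCategory.UNCLASSIFIED:
                issues.append(f"{r.system_id}: not yet classified")
            if r.owner is None:
                issues.append(f"{r.system_id}: no compliance owner")
        return issues


registry = AIRegistry()
registry.register(AISystemRecord(
    system_id="cv-screening-01",
    purpose="Rank job applicants",
    data_sources=["applicant tracking system exports"],
    decision_scope="Shortlisting recommendations reviewed by recruiters",
    business_unit="HR",
))
registry.classify("cv-screening-01", RiskCategory.HIGH_RISK)  # employment is an Annex III area
registry.assign_owner("cv-screening-01", "hr-ai-owner@example.com")
registry.register(AISystemRecord(
    system_id="support-chatbot-02",
    purpose="Answer customer questions",
    data_sources=["help-centre articles"],
    decision_scope="Informational replies only",
    business_unit="Customer service",
    uses_gpai_model=True,
))
print(registry.gaps())
```

In this example only the chatbot is reported, because it has neither a risk category nor an owner yet.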

Mistake #2: Inadequate Risk Assessment and Documentation

What's happening: Companies are treating risk assessment as a checkbox exercise rather than building robust, ongoing processes. Many assume they can prepare technical documentation closer to the August deadline.

Why this matters: The EU AI Act requires comprehensive technical documentation that demonstrates systematic risk assessment, not just compliance paperwork. Regulators will scrutinize your actual risk management processes.

How to fix it:

Implement structured risk assessment frameworks that evaluate bias, discrimination, and safety risks

Document training datasets, testing procedures, and evaluation results for each high-risk system

Create audit trails showing how you identify, assess, and mitigate AI-related risks

Establish regular risk review cycles with clear escalation procedures

Maintain evidence of risk mitigation effectiveness over time
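A risk register with an append-only audit trail is one way to evidence the ongoing process described above. The sketch below assumes a hypothetical 1-to-5 likelihood and severity scale, an escalation threshold of 15, and a 90-day review cycle; none of these values come from the Act.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta

ESCALATION_THRESHOLD = 15             # hypothetical: scores >= 15 go to an AI risk committee
REVIEW_INTERVAL = timedelta(days=90)  # hypothetical quarterly review cycle


@dataclass
class RiskEntry:
    risk_id: str
    system_id: str
    description: str
    category: str        # e.g. "bias", "discrimination", "safety"
    likelihood: int      # hypothetical 1-5 scale
    severity: int        # hypothetical 1-5 scale
    mitigation: str = ""
    last_reviewed: date | None = None
    history: list[str] = field(default_factory=list)

    @property
    def score(self) -> int:
        return self.likelihood * self.severity

    def log(self, when: date, event: str) -> None:
        """Append to the audit trail; existing entries are never edited or deleted."""
        self.history.append(f"{when.isoformat()} {event}")


def review_queue(risks: list[RiskEntry], today: date) -> dict[str, list[str]]:
    """Return risk IDs that need escalation and those overdue for review."""
    queue: dict[str, list[str]] = {"escalate": [], "overdue": []}
    for r in risks:
        if r.score >= ESCALATION_THRESHOLD:
            queue["escalate"].append(r.risk_id)
        if r.last_reviewed is None or today - r.last_reviewed > REVIEW_INTERVAL:
            queue["overdue"].append(r.risk_id)
    return queue


bias = RiskEntry("R-001", "cv-screening-01", "Lower shortlisting rate for one age group",
                 "bias", likelihood=4, severity=4)
bias.log(date(2025, 3, 1), "identified in pre-deployment testing")
bias.mitigation = "Rebalance training data; human review of all rejections"
bias.last_reviewed = date(2025, 3, 1)
bias.log(date(2025, 3, 1), "mitigation approved by risk owner")

misinfo = RiskEntry("R-002", "support-chatbot-02", "Incorrect refund-policy answers",
                    "accuracy", likelihood=2, severity=3, last_reviewed=date(2025, 5, 20))

print(review_queue([bias, misinfo], today=date(2025, 6, 15)))
```

Here R-001 is both escalated (score 16) and overdue (last reviewed more than 90 days earlier), while R-002 needs no action yet.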

Mistake #3: Neglecting Data Governance Requirements

What's happening: Organizations haven't implemented robust data governance frameworks to ensure their training, validation, and testing datasets meet EU AI Act standards.

Why this matters: Poor data governance risks breaching Article 10's requirement that training, validation, and testing datasets be "relevant, sufficiently representative, and to the best extent possible, free of errors and complete in view of the intended purpose." This is a foundational compliance requirement that affects system performance and bias prevention.

How to fix it:

Establish data governance processes ensuring datasets are sufficiently representative of their intended use

Implement data quality controls to minimize errors and ensure completeness

Create documentation showing how datasets address potential biases

Develop procedures for ongoing data validation and quality assurance

Train data teams on EU AI Act-specific data requirements
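Two basic data quality controls, completeness and representativeness, can be automated with a few lines of Python. This sketch compares each group's share of a dataset with assumed reference shares for the intended deployment population. The field names, reference shares, and 0.05 tolerance are hypothetical, and passing such checks is only one input to Article 10 compliance.

```python
from __future__ import annotations

from collections import Counter


def missing_rates(rows: list[dict], fields: list[str]) -> dict[str, float]:
    """Share of rows in which each required field is absent or None."""
    if not rows:
        raise ValueError("empty dataset")
    n = len(rows)
    return {f: sum(1 for r in rows if r.get(f) is None) / n for f in fields}


def representation_gaps(rows: list[dict], attribute: str,
                        reference: dict[str, float],
                        tolerance: float = 0.05) -> dict[str, float]:
    """Groups whose share of the dataset differs from a reference share
    (e.g. the population the system will be used on) by more than `tolerance`."""
    counts = Counter(r[attribute] for r in rows if r.get(attribute) is not None)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference.items():
        observed = counts[group] / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


rows = (
    [{"age_band": "18-34", "region": "north", "label": 1}] * 6
    + [{"age_band": "35-54", "region": "south", "label": 0}] * 3
    + [{"age_band": "55+", "region": None, "label": 1}]
)
# Hypothetical reference shares for the intended deployment population.
reference = {"18-34": 0.40, "35-54": 0.30, "55+": 0.30}

print(missing_rates(rows, ["region", "label"]))         # region missing in 1 of 10 rows
print(representation_gaps(rows, "age_band", reference))  # 18-34 over-, 55+ under-represented
```

Signed gaps make the direction of any imbalance explicit, which is useful when documenting how a dataset's biases were identified and addressed.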

Mistake #4: Missing Human Oversight Implementation

What's happening: Organizations haven't designed meaningful human-in-the-loop checkpoints for AI systems that affect safety, fundamental rights, or financial outcomes.

Why this matters: Human oversight isn't optional for high-risk AI systems: it's a core requirement. Systems must be designed to enable effective human intervention before adverse outcomes occur.

How to fix it:

Design human oversight mechanisms that allow intervention in AI decision-making processes

Establish clear workflows with human checkpoints for high-risk systems

Train oversight personnel to understand system limitations and intervention points

Create escalation procedures when human operators identify concerning AI outputs

Document how human oversight prevents or mitigates potential harms
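A human checkpoint can be as simple as a routing rule that holds adverse or low-confidence outputs for review and records whenever a reviewer overrides the model. The threshold, route names, and record fields below are hypothetical; an actual design should follow from each system's risk assessment.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum

CONFIDENCE_FLOOR = 0.80  # hypothetical threshold; set per system from validation results


class Route(Enum):
    AUTO = "auto"                  # output may take effect without a human step
    HUMAN_REVIEW = "human_review"  # held until a trained reviewer decides
    ESCALATE = "escalate"          # held and sent to a senior reviewer


@dataclass
class ModelOutput:
    case_id: str
    recommendation: str  # e.g. "approve" or "reject"
    confidence: float    # model score in [0, 1]
    adverse: bool        # would acting on it adversely affect the person?


def route(output: ModelOutput) -> Route:
    """Checkpoint that runs before any output takes effect."""
    low_confidence = output.confidence < CONFIDENCE_FLOOR
    if output.adverse and low_confidence:
        return Route.ESCALATE
    if output.adverse or low_confidence:
        return Route.HUMAN_REVIEW
    return Route.AUTO


def record_decision(output: ModelOutput, reviewer: str, final: str,
                    reason: str, audit: list[dict]) -> str:
    """Store the human's final call; the reviewer can override the model."""
    audit.append({
        "case_id": output.case_id,
        "model_recommendation": output.recommendation,
        "final_decision": final,
        "overridden": final != output.recommendation,
        "reviewer": reviewer,
        "reason": reason,
    })
    return final


audit_trail: list[dict] = []
case = ModelOutput("loan-1042", "reject", confidence=0.62, adverse=True)
print(route(case))  # Route.ESCALATE
record_decision(case, "senior.reviewer", "approve",
                "Income documents the model did not see", audit_trail)
print(audit_trail[0]["overridden"])  # True
```

The override record doubles as documentation of how oversight changed outcomes in practice.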

Mistake #5: Insufficient Employee AI Literacy

What's happening: Many organizations missed the February 2, 2025 deadline for AI literacy training and still haven't implemented programs to ensure employees understand AI risk management and governance.

Why this matters: This requirement is already in effect and represents an active compliance gap. Employees involved in AI decision-making must understand AI governance principles and risk management approaches.

How to fix it now:

Immediately implement AI literacy programs for developers, compliance teams, and executives

Cover AI governance principles, risk assessment methodologies, and regulatory requirements

Provide role-specific training based on each employee's involvement with AI systems

Document training completion and comprehension testing

Establish ongoing education programs as AI governance evolves
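Tracking role-specific training and comprehension testing is straightforward in code. This sketch assumes hypothetical curricula per role and a 70% pass mark, and counts a module as complete only when its comprehension test was passed.

```python
from __future__ import annotations

from datetime import date

# Hypothetical role-specific curricula; adapt to each role's involvement with AI.
CURRICULUM: dict[str, set[str]] = {
    "developer": {"ai-basics", "risk-assessment", "data-governance"},
    "compliance": {"ai-basics", "risk-assessment", "eu-ai-act-obligations"},
    "executive": {"ai-basics", "eu-ai-act-obligations"},
}
PASS_MARK = 0.7  # hypothetical comprehension-test threshold


def outstanding(role: str, completions: list[tuple[str, date, float]]) -> list[str]:
    """Modules the person still needs: a module counts only if its test was passed."""
    passed = {module for module, _completed_on, score in completions if score >= PASS_MARK}
    return sorted(CURRICULUM[role] - passed)


def literacy_report(staff: dict[str, tuple[str, list[tuple[str, date, float]]]]) -> dict[str, list[str]]:
    """Map each person with gaps to their outstanding modules."""
    report = {}
    for person, (role, completions) in staff.items():
        gaps = outstanding(role, completions)
        if gaps:
            report[person] = gaps
    return report


staff = {
    "a.dev": ("developer", [("ai-basics", date(2025, 1, 20), 0.90),
                            ("risk-assessment", date(2025, 1, 27), 0.85),
                            ("data-governance", date(2025, 2, 3), 0.80)]),
    "b.exec": ("executive", [("ai-basics", date(2025, 1, 30), 0.75),
                             ("eu-ai-act-obligations", date(2025, 2, 5), 0.55)]),
}
print(literacy_report(staff))  # {'b.exec': ['eu-ai-act-obligations']}
```

Keeping completion dates alongside scores gives a record of when each person reached the required level.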

Mistake #6: Failure to Prepare for Conformity Assessments

What's happening: Providers of high-risk AI systems haven't worked out which conformity assessment procedure applies to them, prepared for notified-body assessments where those are required, or established processes for drawing up EU declarations of conformity.

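Why this matters: High-risk AI systems must pass a conformity assessment before market entry. For most Annex III systems this is an internal-control procedure, but some, such as certain biometric systems and AI in products covered by Annex I legislation, involve a third-party notified body. Without proper preparation, you'll face significant delays and potential market access issues.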

How to fix it:

Identify which of your AI systems require third-party conformity assessments

Begin preparing documentation packages that assessors will need to review

Implement a quality management system that meets assessment requirements (ISO/IEC 42001 can help structure it)

Establish relationships with notified bodies that can conduct assessments

Create internal processes for maintaining conformity over time
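Article 43 of the Act determines which conformity assessment procedure applies. The helper below is a simplified, hypothetical encoding of that logic for triaging an inventory: Annex I products follow their sectoral procedure, Annex III biometric systems (point 1) need a notified body unless harmonised standards or common specifications were applied in full, and the other Annex III areas (points 2 to 8) use internal control. It ignores edge cases, so treat its output as a starting point for legal review.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class HighRiskSystem:
    system_id: str
    annex_i_product: bool            # product or safety component under Annex I, Section A legislation
    annex_iii_point: int | None      # 1-8 if the use case is listed in Annex III, else None
    standards_fully_applied: bool = False  # harmonised standards or common specifications applied in full


def assessment_route(s: HighRiskSystem) -> str:
    """Simplified, hypothetical reading of Article 43's routing logic."""
    if s.annex_i_product:
        return "procedure under the relevant Annex I legislation"
    if s.annex_iii_point == 1:  # biometrics
        if s.standards_fully_applied:
            return "internal control (Annex VI) or notified body (Annex VII)"
        return "notified body (Annex VII)"
    if s.annex_iii_point is not None and 2 <= s.annex_iii_point <= 8:
        return "internal control (Annex VI)"
    raise ValueError(f"{s.system_id}: not recorded as high-risk")


systems = [
    HighRiskSystem("face-match-03", annex_i_product=False, annex_iii_point=1),
    HighRiskSystem("cv-screening-01", annex_i_product=False, annex_iii_point=4),
    HighRiskSystem("infusion-pump-ai", annex_i_product=True, annex_iii_point=None),
]
for s in systems:
    print(f"{s.system_id}: {assessment_route(s)}")
```

Raising an error for systems not recorded as high-risk keeps misclassified entries from silently receiving a route.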

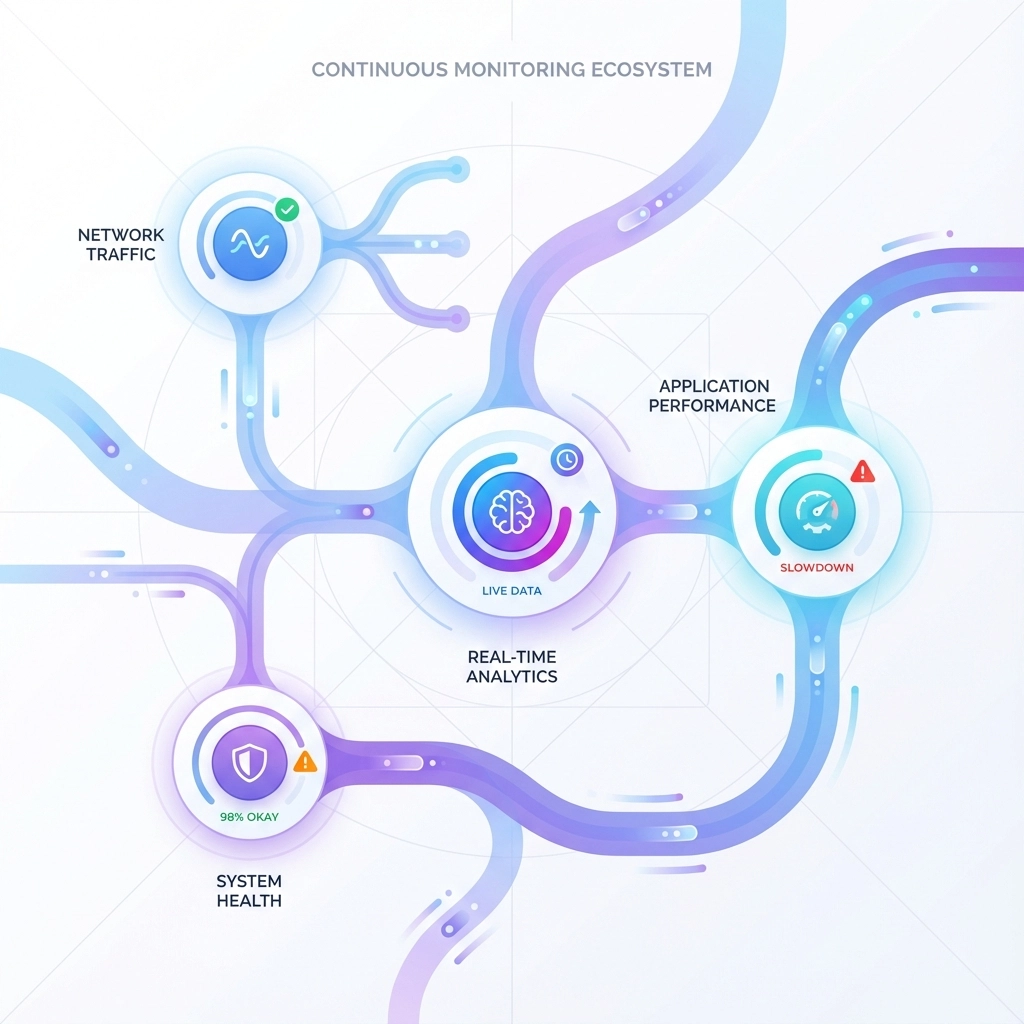

Mistake #7: Lack of Continuous Post-Market Monitoring

What's happening: Organizations are treating compliance as a pre-launch requirement rather than an ongoing operational responsibility requiring logging, monitoring, and corrective actions.

Why this matters: The EU AI Act requires continuous monitoring of AI system performance and outcomes. Post-market surveillance isn't optional: it's a core compliance obligation.

How to fix it:

Establish systems for automatic logging of AI system events and decisions

Implement ongoing monitoring to ensure systems function as intended

Create procedures for detecting and responding to performance degradation

Develop corrective action processes when compliance issues emerge

Maintain detailed records that demonstrate ongoing compliance efforts
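Automatic logging and degradation detection can start small. The sketch below writes timestamped JSON-lines events and flags a system when its rolling accuracy, computed from ground-truth feedback, falls below an assumed baseline minus a tolerance. The window size, baseline, tolerance, and event names are all hypothetical, and a real deployment would persist logs to durable, tamper-evident storage rather than an in-memory list.

```python
from __future__ import annotations

import json
from collections import deque
from datetime import datetime, timezone
from statistics import mean


class EventLog:
    """Append-only, timestamped event log stored as JSON lines."""

    def __init__(self) -> None:
        self.lines: list[str] = []

    def record(self, system_id: str, event: str, **details) -> None:
        entry = {"ts": datetime.now(timezone.utc).isoformat(),
                 "system_id": system_id, "event": event, **details}
        self.lines.append(json.dumps(entry, sort_keys=True))


class PerformanceMonitor:
    """Flags degradation when rolling accuracy drops below baseline - tolerance."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.outcomes: deque[float] = deque(maxlen=window)

    def rolling_accuracy(self) -> float:
        return mean(self.outcomes)

    def observe(self, correct: bool) -> bool:
        self.outcomes.append(1.0 if correct else 0.0)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # wait for a full window before judging
        return self.rolling_accuracy() < self.baseline - self.tolerance


log = EventLog()
monitor = PerformanceMonitor(baseline=0.90, tolerance=0.05, window=10)
# Hypothetical ground-truth feedback: accuracy slips after eight correct predictions.
feedback = [True] * 8 + [False] * 4

for i, correct in enumerate(feedback):
    log.record("cv-screening-01", "prediction", case_id=f"c{i}", correct=correct)
    if monitor.observe(correct):
        # Record the alert and the intended corrective action.
        log.record("cv-screening-01", "degradation_alert",
                   rolling_accuracy=monitor.rolling_accuracy(),
                   action="open corrective-action ticket")
        break

print(len(log.lines))  # 11: ten predictions, then the alert
```

The alert fires on the tenth observation, when the first full window shows 80% accuracy against an 85% floor.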

Your 30-Day Compliance Action Plan

Week 1: Assessment and Inventory

Complete comprehensive AI system mapping

Classify all systems by EU AI Act risk categories

Identify immediate compliance gaps

Assign ownership for each system's compliance

Week 2: Risk and Documentation

Begin formal risk assessments for high-risk systems

Start building technical documentation packages

Implement data governance quality controls

Design human oversight workflows

Week 3: Training and Processes

Launch AI literacy training programs

Establish ongoing monitoring procedures

Create conformity assessment preparation timeline

Build post-market surveillance capabilities

Week 4: Integration and Testing

Test human oversight mechanisms

Validate monitoring and logging systems

Review documentation completeness

Plan for ongoing compliance maintenance

What This Means for Your Organization

The August 2026 deadline isn't just a compliance checkpoint: it's a market access requirement. Organizations that delay these implementations risk not only significant penalties but also operational disruption and competitive disadvantage.

The most successful compliance programs we've seen treat EU AI Act requirements as governance improvements rather than regulatory burdens. They build systematic approaches that enhance AI risk management while meeting legal obligations.

Don't wait until July to start serious compliance work. The organizations that begin comprehensive implementation now will have robust, tested systems in place well before the deadline, and they'll be better positioned to leverage AI responsibly in the European market.

Ready to assess your current compliance readiness? Our governance assessment tools can help you identify specific gaps and build a systematic approach to EU AI Act compliance.

This post was created by Bob Rapp, Founder, aigovops foundation. © 2025, all rights reserved. Join our email list at https://www.aigovopsfoundation.org/ and help build a global community doing good for humans with AI and making the world a better place to ship production AI solutions.
