Why 65% of Companies Are Failing at AI Governance (And How Yours Can Join the 35% That Succeed)

Bob Rapp · 5 days ago

Most AI governance programs aren't just underperforming; they're actively blocking the innovation they're meant to protect. While exact failure rates vary by study, the pattern is clear: one in four AI project failures traces back to weak governance, and more than half of executives admit their companies have no clear approach to managing AI risk, ethics, or accountability.

The companies that crack this code don't just avoid catastrophic failures. They use governance as a competitive advantage, scaling AI faster and more safely than their peers. Here's how they do it, and how you can join them.

The Four Critical Failure Modes That Kill AI Governance

1. The "Policy Theater" Trap

Most organizations mistake writing policies for implementing governance. They produce impressive 50-page AI ethics frameworks, hold workshops on responsible AI, and create oversight committees, then wonder why their governance still feels like bureaucratic theater.

The reality: Governance without operational teeth is just expensive documentation. When developers can't quickly understand what's allowed, when risk assessments take weeks, and when approval processes have no clear timelines, teams work around governance rather than with it.

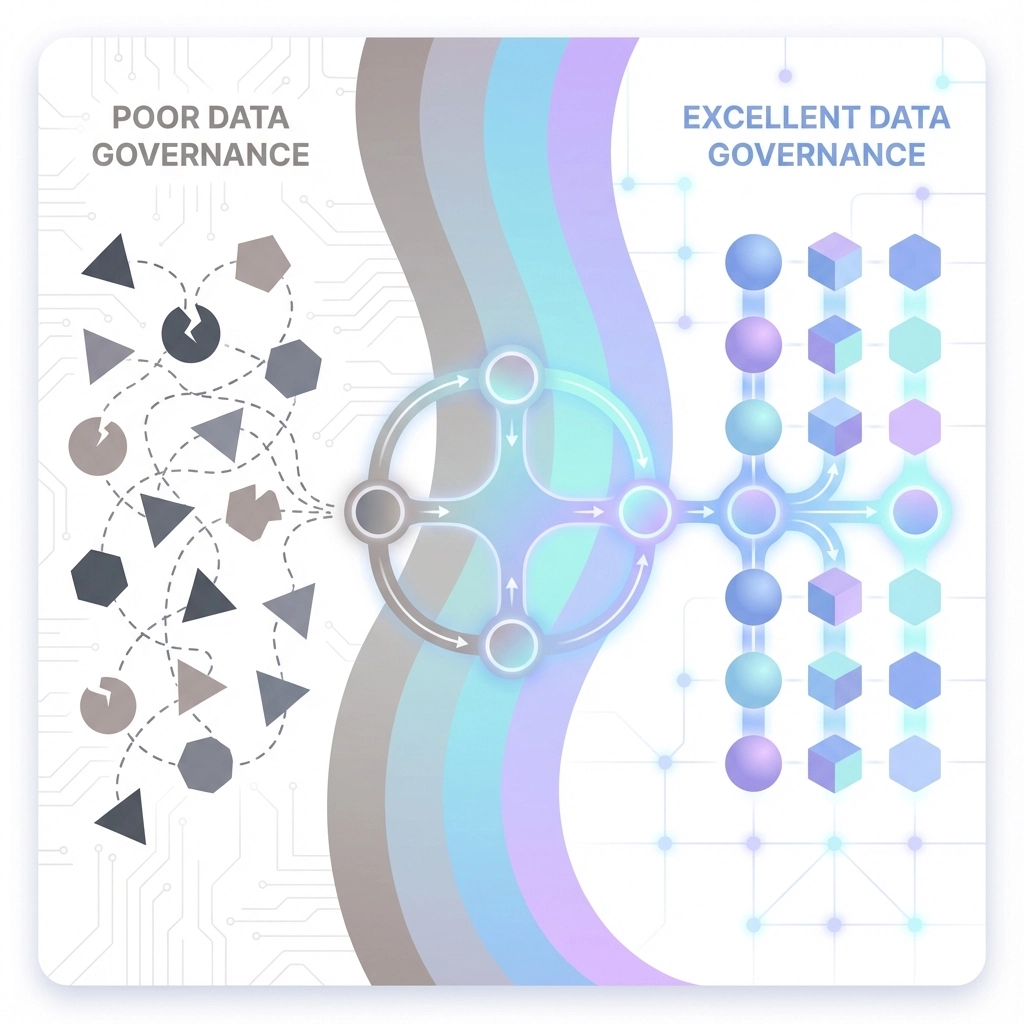

2. Data Quality Governance Collapse

76% of organizations cite poor data quality and governance as top barriers to AI success. This isn't just about having bad data; it's about lacking the governance infrastructure to maintain data quality at AI scale.

Common symptoms include:

No clear data ownership or accountability

Inconsistent data lineage and documentation

Manual data validation processes that can't keep pace

Siloed data policies across business units

No automated quality checks in AI pipelines
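
To make the last symptom concrete, here is a minimal Python sketch of an automated quality gate that could run before training. The `DatasetBatch` container, the required-field list, and the 5% null-rate threshold are hypothetical choices for illustration, not a standard; the point is that ownership, lineage, and completeness are checked by code on every batch rather than by hand.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DatasetBatch:
    # Hypothetical container: the records plus the metadata governance needs.
    name: str
    owner: Optional[str]                          # accountable data owner, if any
    records: list                                 # list of dicts, one per row
    lineage: list = field(default_factory=list)   # upstream source IDs


def check_batch(batch: DatasetBatch, required_fields: list,
                max_null_rate: float = 0.05) -> list:
    """Return human-readable violations; an empty list means the batch passes."""
    violations = []
    if not batch.owner:
        violations.append(f"{batch.name}: no accountable data owner")
    if not batch.lineage:
        violations.append(f"{batch.name}: missing lineage documentation")
    if not batch.records:
        violations.append(f"{batch.name}: batch is empty")
        return violations
    for name in required_fields:
        # A missing key counts the same as an explicit None.
        missing = sum(1 for r in batch.records if r.get(name) is None)
        rate = missing / len(batch.records)
        if rate > max_null_rate:
            violations.append(
                f"{batch.name}: field '{name}' null rate {rate:.0%} "
                f"exceeds limit {max_null_rate:.0%}"
            )
    return violations
```

A pipeline step would refuse to start training whenever `check_batch` returns anything, which turns "manual validation that can't keep pace" into a check that runs every time.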

3. The "Shadow AI" Explosion

When governance feels like friction, innovation moves underground. Teams use personal accounts for AI tools, build models without IT oversight, and deploy solutions that bypass security reviews entirely.

The hidden cost: Organizations lose visibility into their AI risk surface while inadvertently training employees to circumvent controls. By the time leadership discovers the scope of shadow AI, it's often too late to govern it effectively.

4. Approval-Only Governance Models

Traditional governance treats AI like a one-time procurement decision: approve the use case, check the compliance box, and move on. But AI systems keep changing after approval, as models are retrained and the data and usage around them shift, making this approach fundamentally inadequate.

The breakdown happens when:

Models drift from their original behavior patterns

Data sources change without triggering re-review

Usage expands beyond approved parameters

Performance degrades below acceptable thresholds

External regulatory requirements shift
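
These breakdowns can be turned into explicit re-review triggers. The sketch below assumes a hypothetical `Snapshot` record captured at approval time and again in production; it compares the two and lists every reason the system should go back for review. The thresholds are placeholders each organization would set for itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    # Hypothetical description of an AI system at one point in time.
    accuracy: float            # primary performance metric on a held-out set
    drift_score: float         # e.g. PSI of live inputs vs. the approval baseline
    data_sources: frozenset    # upstream data source IDs
    use_cases: frozenset       # business uses the system serves
    regulation_version: str    # regulatory baseline it was assessed against


def rereview_triggers(approved: Snapshot, current: Snapshot,
                      min_accuracy: float, max_drift: float = 0.2) -> list:
    """Compare a live system with its approved baseline; list reasons to re-review."""
    reasons = []
    if current.drift_score > max_drift:
        reasons.append("behavior or input drift above threshold")
    changed = current.data_sources ^ approved.data_sources  # added or removed sources
    if changed:
        reasons.append(f"data sources changed: {sorted(changed)}")
    new_uses = current.use_cases - approved.use_cases
    if new_uses:
        reasons.append(f"usage beyond approval: {sorted(new_uses)}")
    if current.accuracy < min_accuracy:
        reasons.append("performance below acceptable threshold")
    if current.regulation_version != approved.regulation_version:
        reasons.append("regulatory baseline changed")
    return reasons
```

Each check maps to one bullet above, so an empty result means none of the listed breakdowns has been detected.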

Operating Model Gaps That Guarantee Failure

Missing Cross-Functional Accountability

Successful AI governance requires coordination between legal, risk, compliance, IT, and business teams. Most organizations assign AI governance to a single function, usually legal or IT, creating knowledge gaps and enforcement blind spots.

The 35% that succeed establish governance bodies with rotating leadership, clear escalation paths, and shared metrics for success. They treat governance as a business process, not a compliance checkbox.

No Continuous Monitoring Infrastructure

One-time risk assessments can't govern systems that change continuously. Yet many organizations lack basic infrastructure to monitor AI system behavior over time, track performance drift, or alert stakeholders when intervention is needed.

Leading organizations invest in:

Automated model monitoring and alerting

Data drift detection across all inputs

Performance tracking against business objectives

Bias testing in production environments

Continuous compliance validation
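
For data drift detection, one widely used statistic is the Population Stability Index (PSI), which compares how a feature's values are distributed in a baseline sample versus live traffic. Below is a minimal pure-Python sketch; the equal-width binning over the baseline's range and the epsilon floor are simplifying assumptions, and monitoring tools usually offer more robust variants.

```python
import math


def psi(expected: list, actual: list, bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample.

    Both samples must be non-empty. Bin edges come from the baseline's range,
    and a small floor on each bin's share avoids log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as worth watching, and above 0.25 as significant drift, though alert thresholds should be calibrated to each model's own data.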

Governance as Cost Center vs. Business Accelerator

Failed governance programs position themselves as necessary friction, slowing things down to prevent mistakes. Successful programs position governance as business enablement: clear rules, fast decisions, and confident leadership approval for AI investments.

How the Top 35% Build Governance That Actually Works

Platform-Driven Compliance

Instead of manual reviews and email-based approvals, leading organizations embed governance directly into their AI development and deployment platforms. Guardrails become invisible and automatic:

Sensitive data is automatically blocked from model training

Security and privacy controls are enforced by default

Risk assessments are built into development workflows

Compliance documentation is generated automatically

Approval processes have built-in timelines and escalation paths
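
As one illustration of a guardrail that is invisible and automatic, the sketch below screens text records before they reach a training job and keeps an audit log that could feed compliance documentation. The two regex patterns are hypothetical and deliberately simple; pattern matching alone misses many kinds of sensitive data, so treat this as a first line of defense rather than a complete control.

```python
import re

# Hypothetical policy: patterns treated as sensitive. Simple regexes like these
# catch only obvious cases; a vetted detection service would do far more.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def filter_training_records(records: list) -> tuple:
    """Return (records safe for training, audit log of blocked records and reasons)."""
    allowed, audit_log = [], []
    for i, text in enumerate(records):
        hits = [name for name, pat in SENSITIVE_PATTERNS.items() if pat.search(text)]
        if hits:
            # Record what was blocked and why, as evidence for compliance reports.
            audit_log.append({"record": i, "reasons": hits})
        else:
            allowed.append(text)
    return allowed, audit_log
```

Because the filter sits in the data path rather than in a review meeting, developers never have to remember to run it, and the audit log is produced as a side effect.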

Risk-Proportional Governance

Not all AI use cases carry equal risk. High-performing organizations create governance frameworks that scale with risk levels:

Low-risk applications (internal productivity tools): Lightweight self-certification

Medium-risk applications (customer-facing features): Structured review with business stakeholders

High-risk applications (autonomous decision-making): Comprehensive assessment with external validation
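
The tiering above can be encoded so that routing is consistent and instant. This minimal sketch assumes a hypothetical intake form with just two yes/no questions and maps each use case to one of the three governance paths; a real framework would weigh more factors, such as data sensitivity and who is affected by the output.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    LOW = "Lightweight self-certification"
    MEDIUM = "Structured review with business stakeholders"
    HIGH = "Comprehensive assessment with external validation"


@dataclass
class UseCase:
    # Hypothetical intake-form answers.
    name: str
    customer_facing: bool
    makes_autonomous_decisions: bool


def classify(uc: UseCase) -> Tier:
    """Route a use case to the lightest governance path its risk allows."""
    if uc.makes_autonomous_decisions:
        return Tier.HIGH
    if uc.customer_facing:
        return Tier.MEDIUM
    return Tier.LOW
```

Checking the highest-risk condition first means a use case that is both customer-facing and autonomous always lands in the strictest tier.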

Governance as Innovation Enabler

The most effective AI governance programs don't just prevent problems; they accelerate innovation. Clear guidelines help teams move faster, documented risk assessments build leadership confidence, and streamlined approval processes reduce time-to-market.

Key practices include:

Pre-approved AI use case templates for common scenarios

Self-service risk assessment tools with instant feedback

Clear "green light" criteria that teams can validate independently

Fast-track approval paths for low-risk innovations

Regular governance office hours for immediate guidance
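
Green-light criteria work best when a team can check them without waiting for a reviewer. Here is a minimal sketch of a self-service check that returns every unmet criterion at once, so feedback is instant and specific. The criteria, template names, and submission fields are hypothetical examples.

```python
# Hypothetical green-light criteria: (description, check) pairs evaluated
# against a team's own submission.
GREEN_LIGHT_CRITERIA = [
    ("Uses a pre-approved template",
     lambda s: s.get("template") in {"internal-summarizer", "code-assistant"}),
    # An unanswered question is treated as "yes" (conservative default).
    ("No personal data in inputs",
     lambda s: not s.get("uses_personal_data", True)),
    ("Named business owner",
     lambda s: bool(s.get("owner"))),
    ("Human reviews outputs before use",
     lambda s: s.get("human_in_loop") is True),
]


def self_assess(submission: dict) -> tuple:
    """Return (green_light, unmet_criteria) for instant, specific feedback."""
    unmet = [desc for desc, check in GREEN_LIGHT_CRITERIA if not check(submission)]
    return (not unmet, unmet)
```

Submissions that pass go straight to the fast-track path; anything else arrives at a reviewer with the gaps already listed.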

Diagnostic Questions: Where Does Your Governance Stand?

Operational Readiness

Can your teams get governance guidance in under 24 hours?

Do you have automated monitoring for AI systems in production?

Can you trace the full lineage of data feeding your AI models?

Do your approval processes have defined timelines and escalation paths?

Risk Management Maturity

Can you identify all AI systems currently running in your organization?

Do you have different governance requirements based on risk levels?

Can you detect when AI system behavior drifts from expected patterns?

Do you regularly test for bias, fairness, and performance degradation?

Business Integration

Do business leaders view governance as acceleration or friction?

Are governance decisions made by people who understand the technology and the business context?

Can you measure governance ROI in terms of faster deployment and reduced risk?

Do your governance processes scale with your AI ambitions?

Your 30-60-90 Day Implementation Plan

Days 1-30: Foundation and Assessment

Weeks 1-2: Current State Analysis

Inventory all AI systems currently in use (including shadow AI)

Map existing governance processes and identify bottlenecks

Survey teams on current pain points and workarounds

Assess data quality and governance infrastructure
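
An inventory is more useful when it is structured to surface shadow AI directly. The sketch below assumes hypothetical `AISystem` records gathered from sources such as a model registry, expense reports, or team surveys, and lists the systems that lack an accountable owner or approval, putting those that touch sensitive data first.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AISystem:
    # Hypothetical inventory record; fields chosen to surface shadow AI.
    name: str
    discovered_via: str               # e.g. "registry", "expense report", "survey"
    owner: Optional[str] = None
    approved: bool = False
    handles_sensitive_data: bool = False


def shadow_ai(inventory: list) -> list:
    """Systems nobody formally owns or approved, sensitive-data systems first."""
    unmanaged = [s for s in inventory if not s.approved or s.owner is None]
    # False sorts before True, so sensitive systems (key False) come first;
    # the sort is stable, so discovery order is kept within each group.
    return sorted(unmanaged, key=lambda s: not s.handles_sensitive_data)
```

Recording how each system was discovered also shows which discovery channels find the most unmanaged tools.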

Weeks 3-4: Quick Wins

Establish AI governance office hours with designated experts

Create simple risk assessment templates for immediate use

Set up basic monitoring for production AI systems

Document clear approval timelines for different risk categories
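
Approval timelines only have teeth if something happens when they are missed. A minimal sketch, assuming hypothetical per-tier service levels of 2, 10, and 30 days and a simple list of request records, flags pending requests that have exceeded their tier's limit so they can be escalated.

```python
from datetime import datetime, timedelta

# Hypothetical service levels: how long a request may wait at each risk tier
# before it escalates to the next decision-maker.
APPROVAL_SLA = {
    "low": timedelta(days=2),
    "medium": timedelta(days=10),
    "high": timedelta(days=30),
}


def needs_escalation(risk: str, submitted: datetime, now: datetime) -> bool:
    """True once a pending request has waited longer than its tier's SLA."""
    return now - submitted > APPROVAL_SLA[risk]


def overdue(requests: list, now: datetime) -> list:
    """IDs of pending requests that should be escalated."""
    return [
        r["id"] for r in requests
        if r["status"] == "pending" and needs_escalation(r["risk"], r["submitted"], now)
    ]
```

Running `overdue` on a daily schedule and routing its output to the next approver is one simple way to make "defined timelines and escalation paths" operational.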

Days 31-60: Process and Platform Development

Weeks 5-6: Risk-Proportional Framework

Define clear risk categories (low, medium, high) with specific criteria

Create streamlined governance paths for each risk level

Develop self-service tools for low-risk use cases

Establish cross-functional governance review board

Weeks 7-8: Platform Integration

Integrate governance checkpoints into existing development workflows

Set up automated compliance validation where possible

Create governance dashboards for leadership visibility

Pilot new processes with volunteer business units
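
A governance checkpoint in a development workflow can be as simple as a script that fails the build when required documentation is missing. The sketch below assumes a hypothetical JSON model card with a made-up set of required fields, plus a rule that high-risk models must reference an external review.

```python
import json

# Hypothetical fields a model card must contain before deployment is allowed.
REQUIRED_FIELDS = {"owner", "risk_tier", "intended_use", "training_data_sources", "evaluation"}


def validate_model_card(card: dict) -> list:
    """Return problems that should block the pipeline; empty means the gate passes."""
    problems = [f"missing field: {f}" for f in sorted(REQUIRED_FIELDS - set(card))]
    if card.get("risk_tier") == "high" and not card.get("external_review_id"):
        problems.append("high-risk model lacks external review reference")
    return problems


def run_gate(path: str) -> int:
    """Load a JSON model card, print blocking issues, and return a CI exit code."""
    with open(path) as fh:
        issues = validate_model_card(json.load(fh))
    for issue in issues:
        print(f"GOVERNANCE GATE: {issue}")
    return 1 if issues else 0
```

In a CI job, a wrapper such as `sys.exit(run_gate("model_card.json"))` would stop the pipeline on any nonzero result; the file name is illustrative.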

Days 61-90: Scale and Optimization

Weeks 9-10: Continuous Monitoring

Deploy automated monitoring for model performance and data drift

Establish alert systems for governance threshold violations

Create regular review cycles for high-risk AI applications

Build feedback loops between governance outcomes and process improvement
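
Regular review cycles are easy to let slip without a schedule that someone checks. This sketch assumes hypothetical review intervals per risk tier (roughly quarterly for high risk) and returns the systems whose next review date has arrived, most overdue first.

```python
from datetime import date, timedelta

# Hypothetical review cadence per risk tier; high-risk systems are revisited most often.
REVIEW_INTERVAL = {
    "high": timedelta(days=90),
    "medium": timedelta(days=180),
    "low": timedelta(days=365),
}


def next_review(risk: str, last_review: date) -> date:
    return last_review + REVIEW_INTERVAL[risk]


def reviews_due(systems: dict, today: date) -> list:
    """systems maps name -> (risk tier, last review date); returns due names, most overdue first."""
    due = []
    for name, (risk, last) in systems.items():
        when = next_review(risk, last)
        if when <= today:
            due.append((when, name))
    return [name for _, name in sorted(due)]
```

Feeding this list into the same alerting channel as drift alerts keeps scheduled and event-driven reviews in one place.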

Weeks 11-12: Business Integration

Train business leaders on new governance capabilities

Measure and communicate governance ROI (faster approvals, reduced risk)

Expand successful processes across the organization

Plan for advanced governance capabilities based on lessons learned
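
Measuring the "faster approvals" side of governance ROI can start with cycle times already recorded in ticketing or approval tools. This minimal sketch compares median approval time, in days, before and after the new process; the function name and output fields are illustrative, and reduced risk typically needs separate measures such as incident counts.

```python
from statistics import median


def approval_speedup(before_days: list, after_days: list) -> dict:
    """Compare approval cycle times (in days) before and after a process change."""
    b, a = median(before_days), median(after_days)
    if b == 0:
        raise ValueError("baseline median must be positive")
    return {
        "median_before": b,
        "median_after": a,
        # Positive means approvals got faster.
        "reduction_pct": round(100 * (b - a) / b, 1),
    }
```

The median is used rather than the mean so that a handful of unusually long approvals does not dominate the comparison.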

Join the 35% That Get This Right

The gap between AI governance success and failure isn't about having the right policies; it's about building governance that works in practice. Organizations that treat governance as a business enabler rather than a compliance burden don't just avoid AI failures; they innovate faster and scale more confidently than their competitors.

The question isn't whether you need AI governance. The question is whether your governance will accelerate your AI strategy or become another reason why your best AI initiatives never make it to production.

Start with your 30-day foundation, measure progress against business outcomes, and build governance that your teams actually want to use. The 35% of companies getting this right aren't just avoiding AI disasters; they're using governance as their secret weapon for sustainable AI innovation.

This post was created by Bob Rapp, founder of the aigovops foundation. © 2025, all rights reserved. Join our email list at https://www.aigovopsfoundation.org/ and help build a global community that does good for humans with AI and makes the world a better place to ship production AI solutions.
